Homer: There's three ways to do things. The right way, the wrong way, and the Max Power way!

Bart: Isn't that the wrong way?

Homer: Yeah. But faster!

- "Homer to the Max"

Every few months, I try to talk someone down from building a real-time product analytics system. When I’m lucky, I can get to them early.

The turnaround time for most of the web analysis done at Etsy is at least 24 hours. This is a leading source of grousing. Decreasing this interval is periodically raised as a priority, either by engineers itching for a challenge or by others hoping to make decisions more rapidly. There are companies out there selling instant usage numbers, so why can’t we have them?

Here’s an excerpt from a manifesto demanding the construction of such a system. This was written several years ago by an otherwise brilliant individual, whom I respect. I have made a few omissions for brevity.

We believe in…

- Timeliness. I want the data to be at most 5 minutes old. So this is a near-real-time system.

- Comprehensiveness. No sampling. Complete data sets.

- Accuracy (how precise the data is). Everything should be accurate.

- Accessibility. Getting to meaningful data in Google Analytics is awful. To start with it’s all 12 - 24 hours old, and this is a huge impediment to insight & action.

- Performance. Most reports / dashboards should render in under 5 seconds.

- Durability. Keep all stats for all time. I know this can get rather tough, but it’s just text.

The 23-year-old programmer inside of me is salivating at the idea of building this. The burned out 27-year-old programmer inside of me is busy writing an email about how all of these demands, taken together, probably violate the CAP theorem somehow and also, hey, did you know that accuracy and precision are different?

But the 33-year-old programmer (who has long since beaten those demons into a bloody submission) sees the difficulty as irrelevant at best. Real-time analytics are undesirable. While there are many things wrong with our infrastructure, I would argue that the waiting is not one of those things.

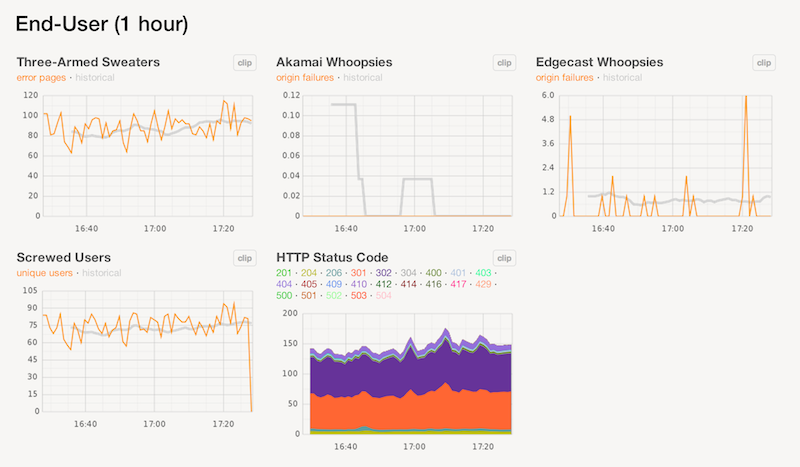

Engineers might find this assertion more puzzling than most. I am sympathetic to this mindset, and I can understand why engineers are predisposed to see instantaneous A/B statistics as self-evidently positive. We monitor everything about our site in real time. Real-time metrics and graphing are the key to deploying 40 times daily with relative impunity. Measure anything, measure everything!

This line of thinking is a trap. It’s important to divorce the concepts of operational metrics and product analytics. Confusing how we do things with how we decide which things to do is a fatal mistake.

So what is it that makes product analysis different? Well, there are many ways to screw yourself with real-time analytics. I will endeavor to list a few.

The first and most fundamental way is to disregard statistical significance testing entirely. This is a rookie mistake, but it’s one that’s made all of the time. Let’s say you’re testing a text change for a link on your website. Being an impatient person, you decide to do this over the course of an hour. You observe that 20 people in bucket A clicked, but 30 in bucket B clicked. Satisfied, and eager to move on, you choose bucket B. There are probably thousands of people doing this right now, and they’re getting away with it.
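To put a number on the 20-versus-30 example, here is a minimal Python sketch of one way to test it. It assumes two things the text doesn't state: both buckets received the same number of visitors, and clicks are rare. Under those assumptions, if the link text made no difference, each of the 50 clicks would be equally likely to land in either bucket (a Poisson approximation, not an exact test on visitor counts). The function name `split_p_value` is hypothetical.

```python
from math import comb

def split_p_value(clicks_a: int, clicks_b: int) -> float:
    """Two-sided p-value for how lopsided a split of clicks between two
    equally sized buckets is, if the change makes no difference.

    Assumes equal traffic per bucket and rare clicks, so under the null
    hypothesis each click is a fair coin flip between A and B.
    """
    n = clicks_a + clicks_b
    low = min(clicks_a, clicks_b)
    # Probability of a split at least this uneven in one direction.
    one_tail = sum(comb(n, k) for k in range(low + 1)) / 2 ** n
    # Double it for a two-sided test, capping at 1.0 for near-even splits.
    return min(1.0, 2 * one_tail)

print(round(split_p_value(20, 30), 3))
```

Under those assumptions the result comes out at roughly 0.2: two identical links would produce a split this lopsided about one time in five, which is nowhere near enough evidence to pick bucket B.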

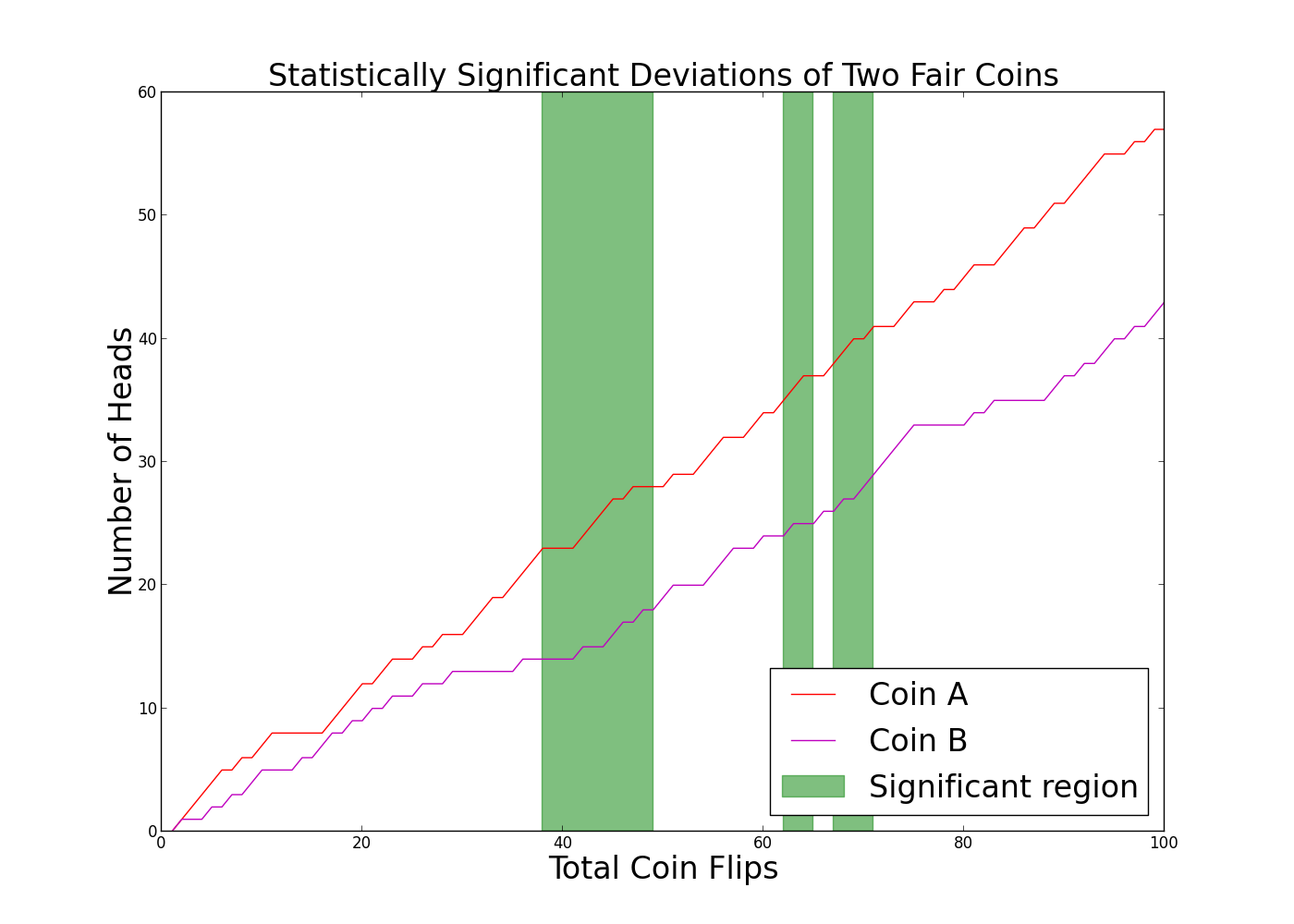

This is a mistake because there’s no measurement of how likely it is that the observed difference (20 clicks vs. 30 clicks) arose by chance alone. Suppose that instead of measuring text on hyperlinks, we were flipping two quarters to see whether there was any difference between them. As we flip, we could see a large gap between the number of heads each quarter turns up. But since we’re talking about quarters, it’s more natural to suspect that the gap might be due to chance. Significance testing lets us estimate how likely we would be to see a gap that large if chance were the only thing at work.
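The quarter-flipping thought experiment is easy to simulate. This sketch flips two fair quarters 100 times each and counts how often their head totals end up 10 or more apart; the flip count, the gap threshold, and the `chance_of_gap` name are illustrative choices, not anything from the text.

```python
import random

def chance_of_gap(flips: int, gap: int, trials: int = 10_000, seed: int = 1) -> float:
    """Fraction of trials in which two fair quarters, each flipped `flips`
    times, end up at least `gap` heads apart."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    hits = 0
    for _ in range(trials):
        # Each random bit is one fair flip; counting the 1 bits counts heads.
        heads_a = bin(rng.getrandbits(flips)).count("1")
        heads_b = bin(rng.getrandbits(flips)).count("1")
        if abs(heads_a - heads_b) >= gap:
            hits += 1
    return hits / trials

print(chance_of_gap(flips=100, gap=10))
```

It prints a value close to 0.18, so a ten-head gap between two perfectly ordinary quarters turns up in nearly one run out of five.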

A subtler error is to do significance testing, but to halt the experiment as soon as the result reaches significance. This is always a bad idea, and the problem is exacerbated by trying to make decisions far too quickly. Funny business with timeframes can coerce most A/B tests into statistical significance.
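The cost of stopping at the first significant result can be shown with a simulated A/A test, where both buckets are identical and any "winner" is a false positive. This is a minimal sketch under assumed parameters (a 5% conversion rate, 100 new visitors per bucket between peeks, 30 peeks, and a pooled two-proportion z-test); none of these numbers come from Etsy, and the function names are hypothetical. It compares stopping at the first peek with p < 0.05 against testing once at the end.

```python
import random
from math import erfc, sqrt

def z_test_p(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or all conversions) so far: no evidence
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    return erfc(abs(z) / sqrt(2))

def false_positive_rates(experiments=200, peeks=30, batch=100, rate=0.05,
                         alpha=0.05, seed=7):
    """Simulate A/A tests and return (stop-at-first-significance rate,
    test-once-at-the-end rate). Both are false-positive rates."""
    rng = random.Random(seed)
    stopped_early = fixed_horizon = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        significant_at_some_peek = False
        for _ in range(peeks):
            # Both buckets share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            p = z_test_p(conv_a, n, conv_b, n)
            if p < alpha:
                significant_at_some_peek = True
        stopped_early += significant_at_some_peek
        fixed_horizon += p < alpha  # p from the final peek only
    return stopped_early / experiments, fixed_horizon / experiments

print(false_positive_rates())
```

Every experiment that is significant at the final peek was also significant at some peek, so the early-stopping rate can never fall below the fixed-horizon rate. In practice it lands well above the nominal 5%, while the fixed-horizon rate stays near it.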

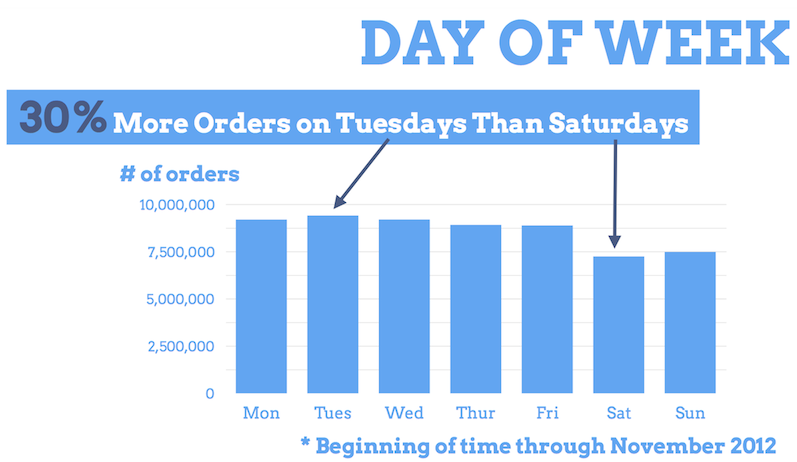

Depending on the change that’s being made, making any decision based on a single day of data could be ill-conceived. Even if you think you have plenty of data, it’s not farfetched to imagine that user behavior has its own rhythms. A conspicuous (if basic) example of this is that Etsy sees 30% more orders on Tuesdays than it does on Sundays.

While the sale count itself might not skew a random test, user demographics could differ from day to day. Or, very likely, you could see a major difference in user behavior immediately upon releasing a change, only to watch it evaporate as users learn to use the new functionality. Given all of these concerns, the conservative and reasonable stance is to only consider tests that last a few days or more.
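One way to turn "a few days or more" into a concrete plan is to estimate the sample size before launching, then round the duration up to whole weeks so that busy Tuesdays and quiet Sundays are represented equally. The sketch below uses the textbook sample-size formula for comparing two proportions. The 2% and 3% click-through rates, 80% power, 500 visitors per bucket per day, the whole-week rounding policy, and the function names are all assumptions for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def visitors_per_bucket(p_control: float, p_variant: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Standard sample-size estimate for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_variant * (1 - p_variant))) ** 2
    return ceil(numerator / (p_control - p_variant) ** 2)

def required_test_days(p_control: float, p_variant: float,
                       daily_visitors_per_bucket: int) -> int:
    """Days needed to reach the sample size, rounded up to whole weeks
    (an assumed policy, so every weekday is covered equally often)."""
    n = visitors_per_bucket(p_control, p_variant)
    days = ceil(n / daily_visitors_per_bucket)
    return 7 * ceil(days / 7)

print(visitors_per_bucket(0.02, 0.03))
print(required_test_days(0.02, 0.03, daily_visitors_per_bucket=500))
```

With those assumed numbers it asks for a bit under 4,000 visitors per bucket, which takes eight days at 500 a day and therefore rounds up to a two-week test. Detecting a smaller lift would push the duration out further.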

One could certainly have a real-time analytics system without making any of these mistakes. (To be clear, I find this unlikely. Idle hands stoked by a stream of numbers are the devil’s playthings.) But unless the intention is to make decisions with this data, one might wonder what the purpose of such a system could possibly be. Wasting the effort to erect complexity for which there is no use case is perhaps the worst of all of these possible pitfalls.

For all of these reasons I’ve come to view delayed analytics as positive. The turnaround time also imposes a welcome pressure on experimental design. People are more likely to think carefully about how their controls work and how they set up their measurements when there’s no promise of immediate feedback.

Real-time web analytics is a seductive concept. It appeals to our desire for instant gratification. But the truth is that there are very few product decisions that can be made in real time, if there are any at all. Analysis is difficult enough already, without attempting to do it at speed.