I was once oblivious to A/B testing.

My first several years out of college were spent building a financial data website. The product, and the company, were run by salesmen. Subscribers paid tens of thousands of dollars per seat to use our software. That entitled them to on-site training and, in some cases, direct input on product decisions. We did giant releases that often required years to complete, and one by one we were ground to bits by long stretches of hundred-hour weeks.

Whatever I might think of this as a worthwhile human endeavor generally, as a business model it was on solid footing. And experimental rigor belonged nowhere near it. For one thing, design was completely beside the point: in most cases the users and those making the purchasing decisions weren’t the same people. Purchases were determined by a comparison of our feature set to that of a competitor. The price point implied that training in person would smooth over any usability issues. Eventually, I freaked out and moved to Brooklyn.

When I got to Etsy in 2007, experimentation wasn’t something that was done. Although I had some awareness that the consumer web is a different animal, the degree to which this is true was lost on me at the time. So when I found the development model to be the same, I wasn’t appropriately surprised. In retrospect, I still wouldn’t rank waterfall methodology (with its inherent lack of iteration and measurement) in the top twenty strangest things happening at Etsy in the early days. So it would be really out of place to fault anyone for it.

So anyway, in my first few years at Etsy the releases went as follows. We would plan something ambitious. We’d spend a lot of time (generally way too long, but that’s another story) building that thing (or some random other thing; again, another story). Eventually it’d be released. We’d talk about the release in our all-hands meeting, at which point there would be applause. We’d move on to other things. Etsy would do generally well, more than doubling in sales year over year. And then after about two years or so we would turn off that feature. And nothing bad would happen.

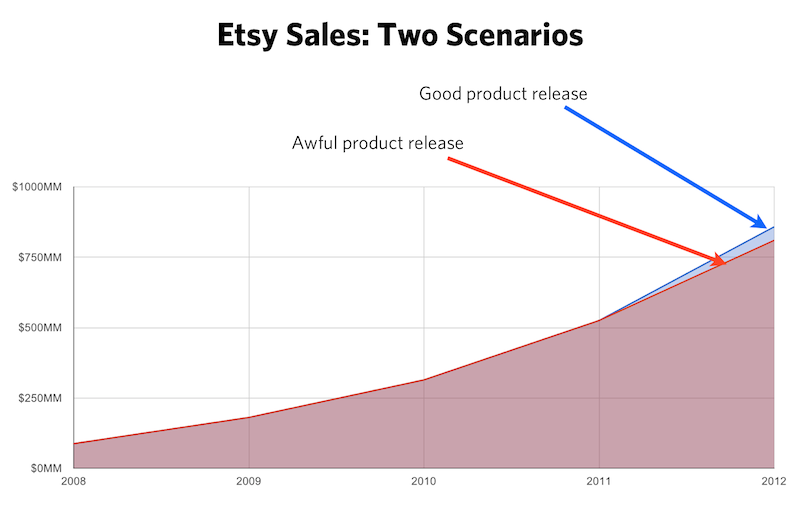

Some discussion about why this was possible is warranted. The short answer is that this could happen because Etsy’s growth was an externality. This is still true today, in 2013. We have somewhere north of 800,000 sellers, thousands of whom are probably attending craft fairs as we speak and promoting themselves. And also, our site. We’re lucky, but any site experiencing growth is probably in a similar situation: there’s a core feature set that is working for you. Cool. This subsidizes anything else you wish to do, and if you aren’t thinking about things very hard you will attribute the growth to whatever you did most recently. It’s easy to declare yourself to be a genius in this situation and call it a day. The status quo in our working lives is to confuse effort with progress.

But I had stuck around at Etsy long enough to see behind the curtain. Eventually, the support tickets for celebrated features would reach critical mass, and someone would try to figure out if they were even worth the time. For a shockingly large percentage, the answer to this was “no.” And usually, I had something to do with those features.

I had cut my teeth at one job that I considered to be meaningless. And although I viewed Etsy’s work as extremely meaningful, as I still do, I couldn’t suppress the idea that I wasn’t making the most of my labor. Even if the situation allowed for it, I did not want to be deluded about the importance and the effectiveness of my life’s work.

Measurement is the way out of this. When growth is an externality, controlled experiments are the only way to distinguish a good release from a bad one. But to measure is to risk overturning the apple cart: it introduces the possibility of work being acknowledged as a regrettable waste of time. (Some personalities you may encounter will not want to test purely for this reason. But not, in my experience, the kind of personalities that wind up being engineers.)
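As a rough illustration of what a controlled experiment measures, here is a minimal sketch of a two-proportion z-test. It assumes visitors are split at random between a control group and a treatment group over the same time period, so that external growth affects both groups equally. The function name and all of the numbers are hypothetical, chosen only for illustration; this is one common way to compare conversion rates, not a description of how Etsy ran its tests.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (A) and treatment (B).

    Returns (lift, z, p_value), where lift is the absolute difference in
    conversion rate and p_value is two-sided under a normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that the feature changes nothing.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# Hypothetical numbers: 10,000 visitors per group, measured concurrently.
lift, z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=205, n_b=10_000)
print(f"lift={lift:.4f} z={z:.2f} p={p:.2f}")
```

With these made-up numbers the treatment converts slightly better, but the difference is well within the noise. A comparison of this month's sales to last month's would not reveal that, because the site's background growth would be counted as part of the feature's effect.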

Through my own experimentation, I have uncovered a secret that makes this confrontation palatable. Here it is: nearly everything fails. As I have measured the features I’ve built, it’s been humbling to realize how rare it is for them to succeed on the first attempt. I strongly suspect that this experience is universal, but it is not universally recognized or acknowledged.

If someone claims success without measuring it in an experiment, odds are pretty good that they are mistaken. Experimentation is the only way to separate reality from the noise, and to learn. And the only way to make progress is to incorporate the presumption of failure into the process.

Don’t spend six months building something if you can divide it into smaller, measurable pieces. The six-month version will probably fail. Because everything fails. When it does, you will have six months of changes to untangle if you want to determine which parts work and which parts don’t. Small steps that are validated not to fail and that build on one another are the best way, short of luck, to actually accomplish our highest ambitions.

To paraphrase Marx: the demand to give up illusions is the demand to give up the conditions that require illusions. I don’t ask people to test because I want them to see how badly they are failing. I ask them to test so that they can stop failing.